Spotting Deepfakes: An Essential Skill

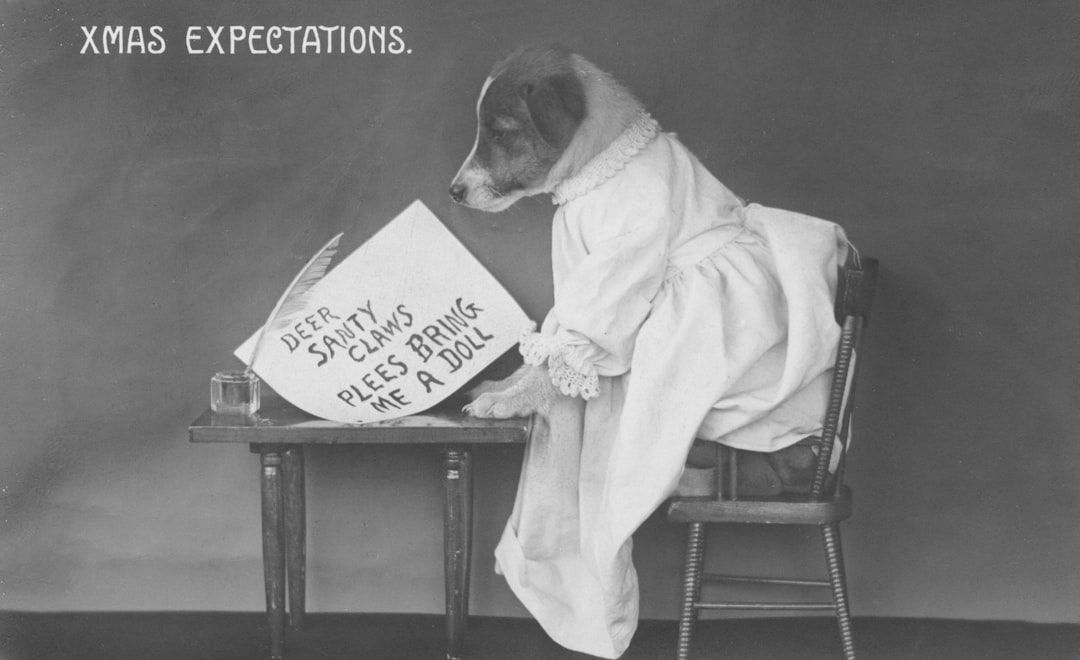

Your professor shares a video in class. A politician admits to something shocking. The clip looks real: perfect lighting, natural movements, convincing audio. But here’s the thing: it never happened.

Deepfakes have moved beyond obvious fakes with weird blinking patterns. The technology now produces content that fools even careful observers. As a college student consuming hours of digital media daily, you need practical skills to separate authentic content from AI-generated fabrications.

This isn’t about becoming paranoid - it’s about becoming informed.

What Makes Modern Deepfakes So Convincing

Before you can spot fakes, understand what you’re dealing with. Deepfake technology uses neural networks trained on thousands of images to generate synthetic media. Early versions struggled with details: eyes that didn’t blink, edges that blurred, audio that sounded robotic.

Those days are gone.

Current deepfake generators handle most of these tells. They’ve learned to simulate natural blinking. They match lip movements to audio with frightening accuracy. Some can generate entirely fictional people who never existed.

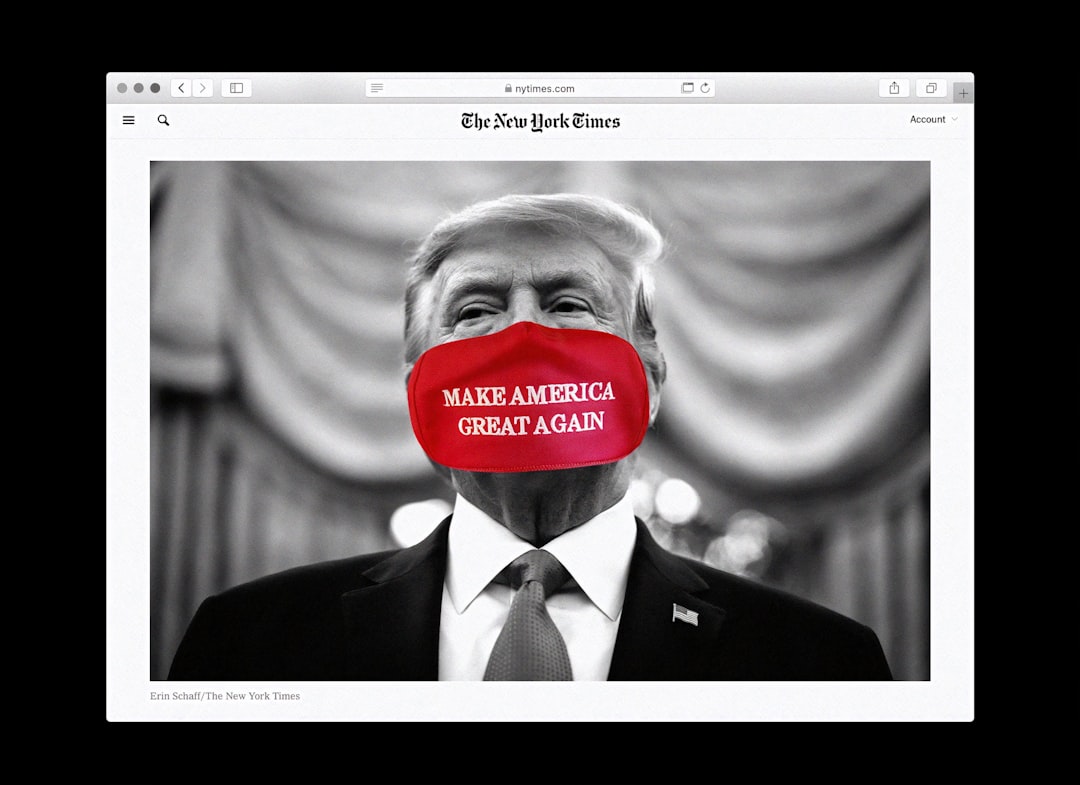

The most dangerous fakes aren’t the obvious ones. They’re the subtle manipulations: a quote slightly altered, a context shifted, a reaction fabricated. These slip past our defenses because they seem plausible.

Step 1: Check the Source Before Anything Else

Start with the basics - where did this content originate?

Trace the media back to its earliest appearance online. Tools like Google Reverse Image Search or TinEye let you find where an image first showed up. For videos, try the InVID-WeVerify verification plugin, a browser extension designed for this kind of checking.

Look for:

- Original upload location: Did this first appear on a verified news outlet or a random social account?

- Account age and history: New accounts sharing viral “breaking news” deserve skepticism

- Cross-referencing: Are reputable sources covering this?

Here’s a real example: In 2023, a viral video showed a celebrity endorsing a crypto scheme. Reverse searching revealed the original footage came from an interview about an unrelated topic. The audio had been completely fabricated.

Two minutes of source-checking would have caught it.
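The tracing step can be sketched in a few lines of Python. This is a minimal illustration, not a verification tool: the `Sighting` record, its fields, and the example URLs and dates are all hypothetical, standing in for notes you would take by hand from reverse-search results.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Sighting:
    """One place the media was found (hypothetical record, filled in by hand)."""
    url: str
    posted_on: date          # date the media appeared at this URL
    account_created: date    # date the posting account was created


def earliest_sighting(sightings):
    """Return the sighting with the oldest post date."""
    return min(sightings, key=lambda s: s.posted_on)


def account_age_days(sighting):
    """Age of the posting account, in days, at the time it posted."""
    return (sighting.posted_on - sighting.account_created).days


# Made-up example data; the example.com URLs are placeholders.
sightings = [
    Sighting("https://example.com/viral-repost", date(2023, 5, 10), date(2023, 5, 8)),
    Sighting("https://example.com/original-interview", date(2021, 3, 2), date(2012, 6, 15)),
]

origin = earliest_sighting(sightings)
print("Earliest appearance:", origin.url)
for s in sightings:
    print(s.url, "posted by an account", account_age_days(s), "days old")
```

In this invented data, the viral copy comes from an account only two days old, while the earliest appearance is years older and sits at a different URL. Both are the kinds of signals the checklist above asks you to look for.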

Step 2: Examine Visual Inconsistencies

Your eyes can catch what your brain might miss, if you know where to look.

**Focus on the edges.** Where the face meets hair, background, or clothing, deepfakes often struggle.

**Study the lighting.** Light behaves predictably in real footage.

**Watch at different speeds.** Slow the video to 0.25x. Frame-by-frame viewing reveals glitches invisible at normal speed.

**Pay attention to teeth and tongue.** These remain difficult for AI to render convincingly. Teeth might look too uniform, too blurry, or shift shape during speech.
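If your video player makes frame-by-frame viewing awkward, one option is to dump a short segment to still images and flip through them. The sketch below only builds an `ffmpeg` command. It assumes `ffmpeg` is installed and that the output folder already exists. The file name `clip.mp4`, the folder `frames`, and the helper name `frame_dump_command` are hypothetical.

```python
import shlex


def frame_dump_command(video_path, out_dir, start_seconds, duration_seconds):
    """Build an ffmpeg command that saves the frames of a short segment as PNGs."""
    return [
        "ffmpeg",
        "-ss", str(start_seconds),      # seek to the start of the segment
        "-t", str(duration_seconds),    # number of seconds to read
        "-i", video_path,               # input video
        f"{out_dir}/frame_%05d.png",    # numbered output images
    ]


# Example: three seconds of footage starting at the 12-second mark.
cmd = frame_dump_command("clip.mp4", "frames", 12, 3)
print(shlex.join(cmd))  # copy-pasteable shell command
```

To actually run it, pass the list to `subprocess.run(cmd, check=True)`. Keeping the segment short matters: a few seconds of video already produces dozens of images, and the edges, lighting, and teeth described above are easier to compare across a small set.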

Step 3: Listen Critically to Audio

Audio deepfakes have improved dramatically, but imperfections remain.

Use headphones - seriously. Laptop speakers miss subtle artifacts that headphones reveal.

Listen for:

- Breathing patterns: Real speech includes natural breaths. Synthetic audio often sounds too clean

- Room acoustics: Does the audio match the visual environment? A crowded room should have ambient noise

- Emotional consistency: Does the vocal emotion match facial expressions and body language?

One trick: compare against verified audio of the same person. Pull up an authenticated interview or official video. Do the speech patterns match? Listen for cadence and minor verbal habits.

Step 4: Use Detection Tools (But Don’t Rely on Them Completely)

Several AI-powered tools claim to detect deepfakes. They help, but they’re not foolproof.

Options to look into (access and pricing vary, and not every tool is open to the public):

- Deepware Scanner: Analyzes videos for manipulation signs

- Microsoft Video Authenticator: Provides confidence scores for media authenticity

- Sensity AI: Offers detection for various synthetic media types

- FotoForensics: Reveals image manipulation through error level analysis

Here’s the catch: detection tools and creation tools are in an arms race. A tool that catches today’s deepfakes might miss tomorrow’s. Use them as one input, not the final verdict.

Run multiple tools. If three different detectors flag content as suspicious, that’s meaningful. If only one does, investigate further manually.
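The "one input, not the final verdict" rule can be written down as a small decision function. This is a sketch under stated assumptions: the detector names are placeholders, the results are typed in by hand rather than fetched from any tool's API, and treating two flags the same as one is a choice made here, not something the tools define.

```python
def triage(flags, agreement_threshold=3):
    """Combine manual detector results.

    flags: dict mapping a detector name to True if it flagged the media.
    Returns a (verdict, flagged_names) pair.
    """
    flagged = sorted(name for name, hit in flags.items() if hit)
    if len(flagged) >= agreement_threshold:
        verdict = "suspicious: several detectors agree"
    elif flagged:
        verdict = "inconclusive: investigate manually"
    else:
        verdict = "no flags: not proof of authenticity"
    return verdict, flagged


# Placeholder detector names and made-up results.
results = {"detector_a": True, "detector_b": False, "detector_c": False}
verdict, flagged = triage(results)
print(verdict, flagged)
```

Note that even the "no flags" branch stops short of calling the content authentic, since a detector that catches today’s fakes might miss tomorrow’s.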

Step 5: Apply the Plausibility Test

Step back from technical analysis. Ask yourself:

Does this make sense?

- Would this person actually say this?

- Does this align with their known positions and behavior?

- What would they gain or lose from this statement?

- Who benefits from this content existing?

**Consider timing.** Deepfakes often appear during elections, controversial events, or moments of public tension. Content surfacing at suspiciously convenient times deserves extra scrutiny.

**Check the extraordinary claim threshold.** The more shocking the content, the more verification it needs. A video of a politician making routine comments requires less skepticism than one showing them confessing to crimes.

Common Mistakes to Avoid

**Trusting verification badges too much.** Verified accounts get hacked. They also share unverified content. The blue checkmark means the account is authentic, not that everything it posts is true.

**Assuming older content is safe.** Deepfakes can be created from historical footage. A “newly surfaced” video from years ago might be a recent fabrication.

**Falling for the “too good to be true” trap.** Content that perfectly confirms your existing beliefs deserves the most scrutiny, not the least. Confirmation bias is exactly what disinformation exploits.

**Sharing before verifying.** Once you share manipulated content, you become part of its distribution network. The five minutes you spend checking could prevent spreading a lie to hundreds of people.

When You’re Still Unsure

Sometimes verification efforts hit dead ends. That’s okay.

1. **Don’t share it.** If you can’t verify it, don’t amplify it.

2. **Wait for confirmation.** Major stories get picked up by multiple outlets. Give it 24-48 hours.

3. **Flag it.** Most platforms have reporting mechanisms for suspected manipulated media.

4. **Ask experts.**

Building Long-Term Media Literacy

Spotting deepfakes isn’t a one-time skill. It’s an ongoing practice.

Follow researchers and journalists who cover synthetic media. Subscribe to fact-checking newsletters. Stay updated on how the technology evolves.

And cultivate healthy skepticism, not cynicism. The goal isn’t to distrust everything. It’s to verify before you trust, especially when content provokes strong emotional reactions.

The students who develop these skills now will navigate a media environment their parents couldn’t have imagined. You’re not just protecting yourself from being fooled. You’re building the critical thinking muscles that matter for every aspect of life.

Start practicing today. The next deepfake you encounter might be targeting you.