Advanced Prompt Engineering for Academic Research

You’ve probably typed a question into ChatGPT, gotten a mediocre answer, and wondered what you did wrong. Nothing, really - but you could do better.

Prompt engineering isn’t magic - it’s a skill. And for academic research, it’s becoming as essential as knowing how to use a library database.

Why Your Research Prompts Fall Flat

Most students treat AI tools like Google. They type a question, hit enter, and hope for the best. That approach wastes time and produces shallow results.

Here’s the thing: AI models respond to context. The more specific context you provide, the more useful the output. A prompt like “tell me about climate change” gives you a generic overview. A prompt like “summarize three peer-reviewed studies from 2022-2024 examining coral bleaching rates in the Great Barrier Reef, focusing on method differences” gets you somewhere useful.

The difference is constraints. Specificity gives the model direction.

Step 1: Define Your Research Question First

Before you touch an AI tool, write down exactly what you’re trying to learn. This sounds obvious, but most people skip it anyway.

Take five minutes to answer:

- What specific aspect of this topic am I investigating?

- What type of sources do I need (empirical studies, theoretical frameworks, case analyses)?

- What time period matters for my research?

- What geographic or demographic scope applies?

Don’t open ChatGPT until you can state your research question in one clear sentence. “I want to understand how social media algorithms affect political polarization among voters aged 18-25 in swing states during the 2024 election cycle.” That’s a research question. “Tell me about social media and politics” is not.

Step 2: Structure Your Prompts Using the RICE Framework

RICE stands for Role, Instructions, Context, and Examples. Stack these elements to build prompts that actually work.

Role: Tell the AI what expertise to adopt. “You are a research librarian specializing in environmental science databases” produces different results than no role specification.

Instructions: Be direct about what you want. Use action verbs: “identify,” “compare,” “summarize,” “critique,” “list.”

Context: Include your academic level, the purpose of your research, any constraints from your assignment, and relevant background.

Examples: When possible, show the AI what good output looks like. “Format your response like this example: [paste example].”

A full RICE prompt might look like:

“You are an academic research assistant with expertise in cognitive psychology. I’m an undergraduate writing a literature review on working memory capacity and multitasking performance. Identify five landmark studies from 2010-2023 that established key findings in this area. For each study, provide: authors, year, sample size, main finding, and one limitation. Format as a bulleted list.”

That prompt works because it pins down the role, the task, the scope, and the output format instead of leaving them to chance.
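If you reuse the same structure often, it can help to assemble it from its parts. The following is a minimal sketch, not a standard tool: the function name `build_rice_prompt` and the order in which it stacks the parts are assumptions made for illustration. It only builds the text; you still paste the result into whatever AI tool you use.

```python
def build_rice_prompt(role, instructions, context, examples=None):
    """Assemble a prompt from the four RICE parts (Examples is optional)."""
    parts = [
        f"You are {role}.",
        context.strip(),
        instructions.strip(),
    ]
    if examples:
        # Only add the Examples part when you actually have one to show.
        parts.append("Format your response like this example:\n" + examples.strip())
    return "\n\n".join(parts)


prompt = build_rice_prompt(
    role="an academic research assistant with expertise in cognitive psychology",
    context=("I'm an undergraduate writing a literature review on working "
             "memory capacity and multitasking performance."),
    instructions=("Identify five landmark studies from 2010-2023 that established "
                  "key findings in this area. For each study, provide: authors, "
                  "year, sample size, main finding, and one limitation. "
                  "Format as a bulleted list."),
)
print(prompt)
```

Keeping the four parts as separate arguments makes it easy to swap one (say, the role) and compare the responses you get.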

Step 3: Use Iterative Refinement

Your first prompt rarely produces your best result. Treat prompt engineering as a conversation, not a single query.

Start broad, then narrow. Ask for an overview, identify the most relevant angle, then request deeper analysis of that specific aspect. Each round of interaction adds context the AI can use.

Try this sequence:

- “Give me a 200-word overview of current debates in [your topic].”

- “The second point you mentioned interests me. Expand on that with specific researchers and their positions.”

- “Compare Smith’s 2021 findings with the earlier work you mentioned. Where do they agree, and where do they conflict?”

This iterative approach mimics how experienced researchers actually work. You don’t find your thesis in the first source you read. You follow threads.
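For readers who script their sessions, here is a rough sketch of why each round adds context: every prompt and reply is appended to a running history, and the whole history goes along with the next prompt. The `send` argument is a hypothetical stand-in for whatever function calls your AI tool; `fake_send` below is a placeholder so the example runs on its own and does not represent any real API.

```python
def run_refinement(send, follow_ups):
    """Send each prompt in order, keeping the full history so later
    prompts can refer back to earlier answers.

    `send` is a hypothetical callable: it takes the message history
    (a list of {"role", "content"} dicts) and returns the reply text.
    """
    history = []
    for prompt in follow_ups:
        history.append({"role": "user", "content": prompt})
        reply = send(history)
        history.append({"role": "assistant", "content": reply})
    return history


def fake_send(history):
    # Stand-in for a real model call; just reports how much context it saw.
    return f"(reply based on {len(history)} message(s) of context)"


steps = [
    "Give me a 200-word overview of current debates in [your topic].",
    "The second point you mentioned interests me. Expand on that with "
    "specific researchers and their positions.",
    "Compare Smith's 2021 findings with the earlier work you mentioned. "
    "Where do they agree, and where do they conflict?",
]
for message in run_refinement(fake_send, steps):
    print(f'{message["role"]}: {message["content"]}')
```

The third prompt only makes sense because the first two exchanges are still in the history, which is the same reason follow-ups work better inside one conversation than in a fresh one.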

Step 4: Verify Everything

AI models hallucinate. They invent citations, attribute quotes to the wrong authors, and mix up dates and data.

Never cite anything an AI tells you without independent verification. Use AI outputs as starting points for your own database searches, not as final sources.

Build verification into your workflow:

- When AI mentions a study, search for it in Google Scholar or your university database

- Cross-reference statistics with primary sources

- Check author names against actual publication records

- Treat AI summaries as hypotheses to test, not facts to accept
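One way to make the checklist above hard to skip is to keep a small log of every claim the AI gave you and what you have confirmed so far. This is only a sketch under assumptions: the `Claim` class, its field names, and the placeholder entries are invented for illustration, and the checks themselves still have to be done by hand in Google Scholar or your university database.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """One thing the AI told you that needs checking before you rely on it."""
    text: str
    found_in_database: bool = False   # located in Google Scholar or a library database
    authors_match: bool = False       # author names match the publication record
    numbers_match: bool = False       # statistics agree with the primary source

    def is_verified(self):
        return self.found_in_database and self.authors_match and self.numbers_match


def unverified(claims):
    """Return the claims that are not yet safe to cite."""
    return [c for c in claims if not c.is_verified()]


# Placeholder entries; replace with claims from your own session.
log = [
    Claim("Study A: [citation as given by the AI]", True, True, True),
    Claim("Study B: [citation as given by the AI]", True, False, False),
    Claim("Statistic C: [figure as given by the AI]"),
]
for claim in unverified(log):
    print("Still to check:", claim.text)
```

Every flag defaults to `False`, so a claim counts as unverified until you have actively confirmed each point.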

This sounds paranoid. It’s not. A single fabricated citation in your paper can tank your credibility.

Troubleshooting Common Problems

**Problem: Responses are too generic.** Fix: Add constraints. Specify time periods, methodologies, theoretical frameworks, or geographic regions. Limit the scope until the AI can’t give you boilerplate.

**Problem: AI keeps giving you information you already know.** Fix: State what you already understand. “I know the basics of [concept]. I specifically need to understand [advanced aspect] for an upper-division course.”

**Problem: Citations look suspicious.** Fix: They probably are. Ask the AI: “Are these real publications? Provide DOIs if available.” Then verify independently.

**Problem: Responses contradict each other across sessions.** Fix: AI models don’t have perfect consistency. When you get contradictory information, that’s a signal you need to consult actual sources rather than relying on AI summaries.

Advanced Techniques Worth Trying

Once you’ve mastered the basics, experiment with these approaches:

Chain-of-thought prompting: Ask the AI to explain its reasoning step by step. “Walk me through how you would search for sources on this topic. What databases would you check? What search terms would you use? What filters would you apply?” This surfaces a search method you can adapt.

Comparative analysis prompts: “Compare how a researcher in [Field A] would approach this question versus a researcher in [Field B]. What different methods and assumptions would each bring?”

Gap identification: “Based on the research landscape in [topic], what questions remain unanswered? What methodological approaches haven’t been tried?” This can spark original thesis directions.

Format specification: “Structure your response as an annotated bibliography entry” or “Write this as an abstract following APA guidelines.” Matching output format to your actual needs saves editing time.
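If you find yourself retyping these prompts, you could store them as templates and fill in the bracketed parts each time. This is a small sketch only: the `TEMPLATES` dictionary, the `fill` helper, and the placeholder names (`field_a`, `field_b`, `topic`) are assumptions made for this example, with the wording taken from the prompts above.

```python
TEMPLATES = {
    "comparative": (
        "Compare how a researcher in {field_a} would approach this question "
        "versus a researcher in {field_b}. What different methods and "
        "assumptions would each bring?"
    ),
    "gap": (
        "Based on the research landscape in {topic}, what questions remain "
        "unanswered? What methodological approaches haven't been tried?"
    ),
}


def fill(name, **values):
    """Fill a named template; raises KeyError if a placeholder is missing."""
    return TEMPLATES[name].format(**values)


print(fill("gap", topic="working memory and multitasking"))
```

Because a missing placeholder raises an error, you can’t accidentally send a prompt with a blank left in it.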

What AI Can’t Do For You

Prompt engineering won’t replace critical thinking. AI can help you find information faster and organize it more efficiently. It can’t evaluate whether an argument is sound. It can’t determine if a method was appropriate for a research question. It can’t decide what matters.

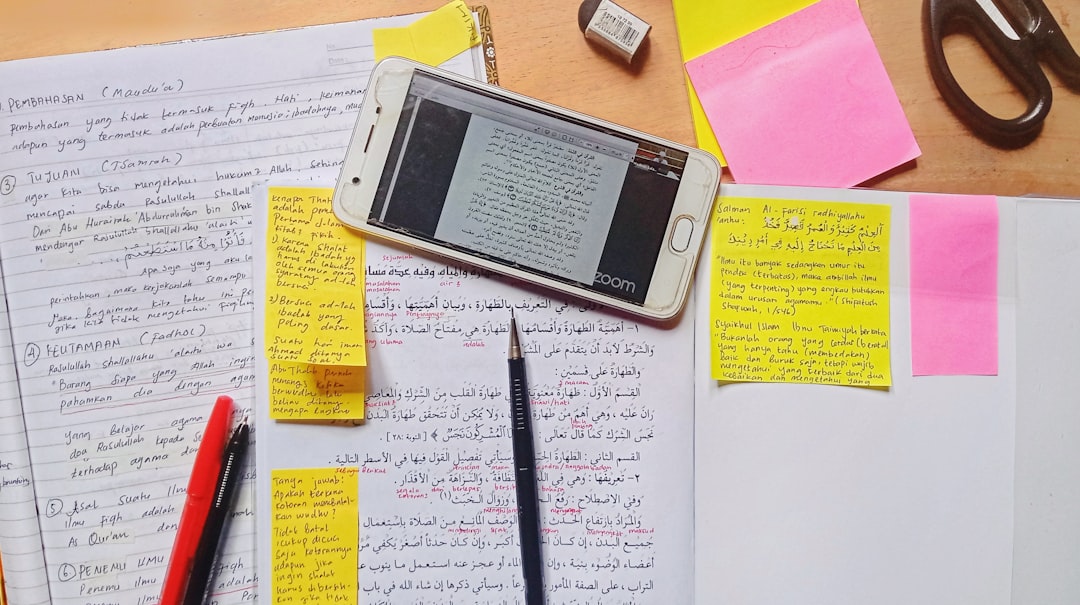

Those judgments remain yours. Use AI to accelerate the mechanical parts of research: finding sources, summarizing long texts, organizing notes, generating initial outlines. Keep the intellectual work for yourself.

That’s not a limitation - that’s the point. Your degree certifies that you can think, not that you can operate software.

Start Practicing Today

Pick a topic from one of your current courses. Spend 30 minutes crafting prompts using the RICE framework. Compare your results to what you’d get from a quick, unstructured query.

The difference will be obvious. And once you see it, you won’t go back to lazy prompting.

Good research has always required good questions. AI just makes that truth harder to ignore.